SCW Trust Agent: AI

Complete visibility and control over AI-generated code. Innovate fast and securely.


Improving Productivity, But Increasing Risk

The widespread adoption of AI coding tools presents a new challenge: a lack of visibility and governance over AI-generated code.

78%

- StackOverflow

30%

- arXiv

50%

- BaxBench

The Benefits of Trust Agent: AI

The new AI capabilities of SCW Trust Agent provide the deep observability and control you need to confidently manage AI adoption in your software development lifecycle (SDLC) without sacrificing security.

The Challenge of AI in Your SDLC

Without a way to manage AI usage, CISOs, AppSec leaders, and engineering leaders are exposed to new risks and to questions they cannot answer. Concerns include:

- Lack of visibility into which developers are using which models.

- Uncertainty around the security proficiency of developers using AI coding tools.

- No insights into what percentage of contributed code is AI-generated.

- Inability to enforce policy and governance to manage AI coding tool risk.

Our Solution – Trust Agent: AI

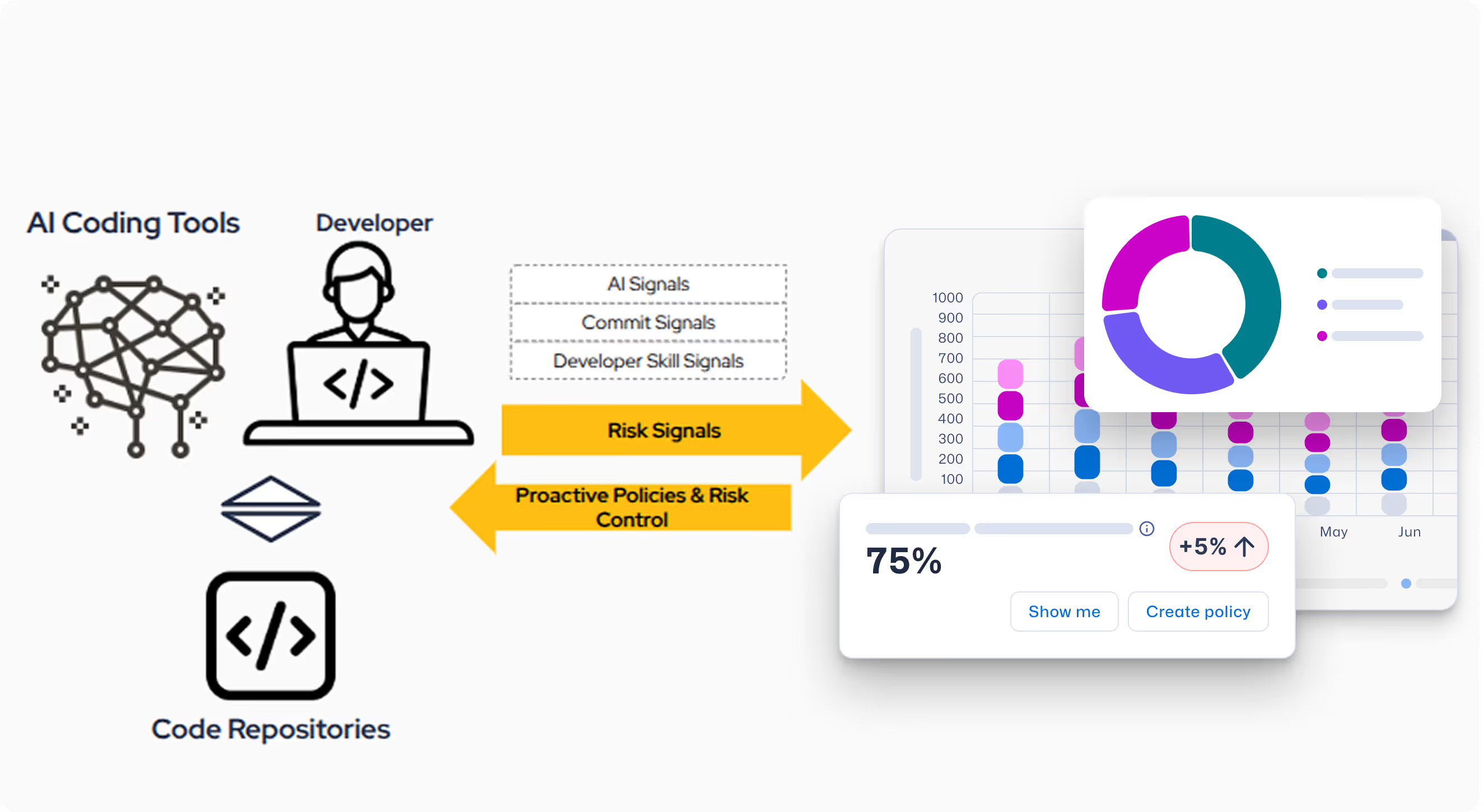

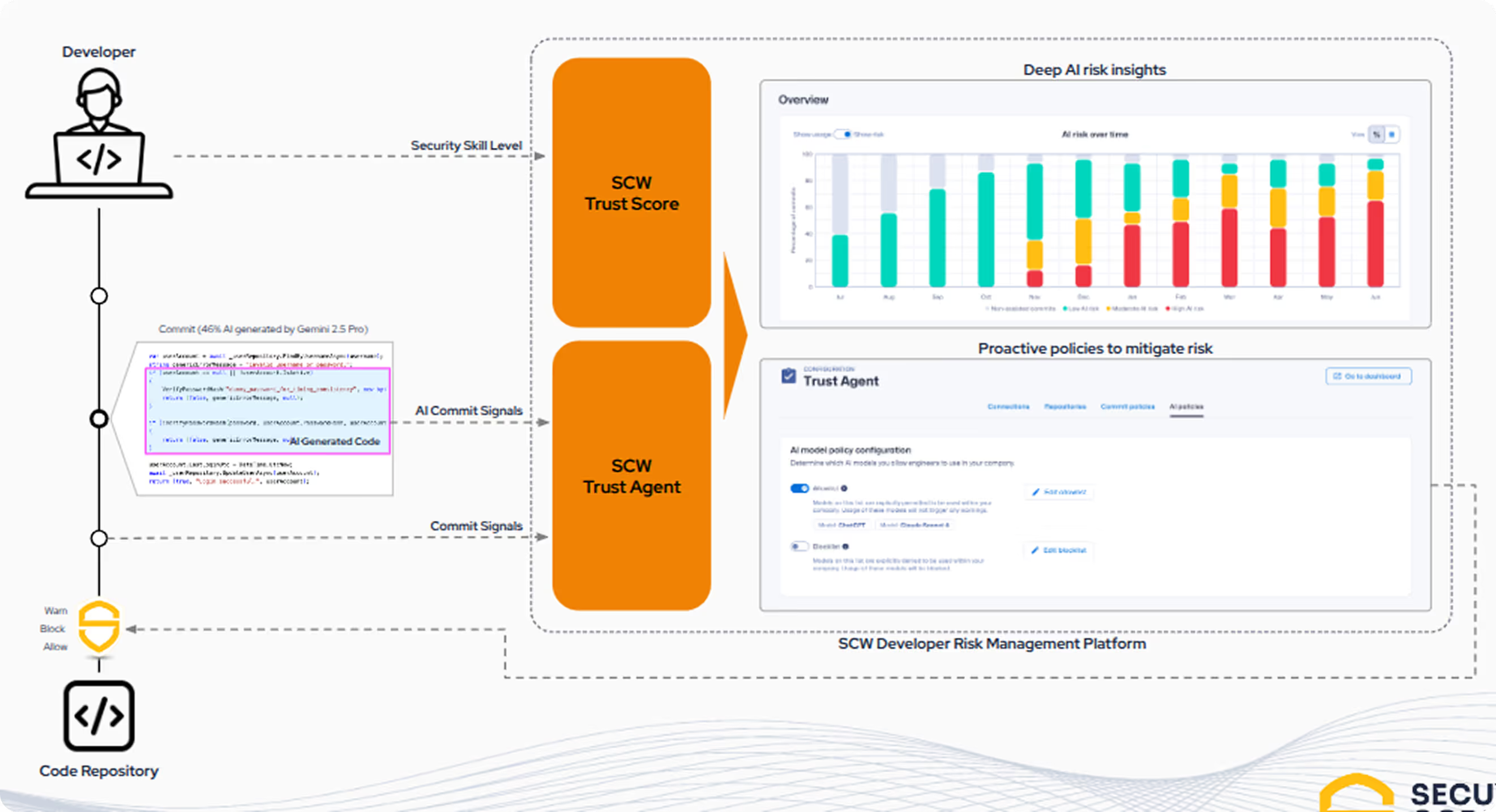

SCW empowers organizations to embrace the speed of AI-driven development without sacrificing security. Trust Agent: AI is the first solution to provide visibility and governance by correlating a unique combination of three key signals to understand AI-assisted developer risk at the commit level.

- AI coding tool usage: Insight into who is using which AI tools and which LLMs, and on which code repositories.

- Signals captured in real time: Trust Agent: AI intercepts AI-generated code on the developer’s computer, inside the IDE.

- Developer secure coding skills: We provide a clear understanding of a developer’s secure coding proficiency, which is the foundational skill required to use AI responsibly.

No more “shadow AI”

Get a full picture of AI coding assistants and agents, as well as the LLMs powering them. Discover unapproved tools and models.

Deep observability into commits

Gain deep visibility into AI-assisted software development, including which developers are using which LLMs and on which codebases.

Integrated Governance and Control

Connect AI-generated code to actual commits to understand the true security risk being introduced. Automate policy enforcement to ensure AI-enabled developers meet secure coding standards before their contributions are accepted.
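As a rough illustration of what commit-level policy enforcement could look like, the sketch below checks one commit against two rules: the model must be on an approved list, and the developer must meet a minimum secure coding score. It is not the Trust Agent: AI API. The `CommitSignals` record, its fields, the model names, and the threshold are all hypothetical and chosen only to make the idea concrete.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CommitSignals:
    """Hypothetical per-commit record combining the three signals."""
    commit_id: str
    developer: str
    ai_tool: Optional[str]   # None when no AI tool was detected
    model: Optional[str]     # None when no LLM was detected
    skill_score: int         # assumed 0-100 secure coding proficiency score


# Assumed policy values, chosen only for illustration.
APPROVED_MODELS = {"approved-model-a", "approved-model-b"}
MIN_SKILL_SCORE = 70


def policy_violations(signals: CommitSignals) -> List[str]:
    """Return the reasons a commit fails the illustrative policy (empty if it passes)."""
    if signals.ai_tool is None and signals.model is None:
        return []  # no AI involvement detected, so the AI policy does not apply
    violations = []
    if signals.model not in APPROVED_MODELS:
        violations.append(f"model {signals.model!r} is not on the approved list")
    if signals.skill_score < MIN_SKILL_SCORE:
        violations.append(
            f"developer {signals.developer!r} scores {signals.skill_score}, "
            f"below the required {MIN_SKILL_SCORE}"
        )
    return violations
```

A gate built this way would accept a contribution only when `policy_violations` returns an empty list, and could surface the returned reasons to the developer otherwise.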

Questions about Trust Agent: AI Insights

Why should I care about the risks of AI/LLM-generated code in my SDLC?

As developers increasingly leverage AI coding tools, a critical new layer of risk is being introduced into SDLCs. Surveys show that 78% of developers are now using these tools, yet studies reveal that as much as 50% of AI-generated code contains security flaws.

This lack of governance and a disconnect between developer knowledge and code quality can quickly spiral out of control, as each insecure AI-generated component adds to your organization's attack surface, complicating efforts to manage risk and maintain compliance.

Read more in this whitepaper: AI Coding Assistants: A Guide to Security-Safe Navigation for the Next Generation of Developers

What models and tools does Trust Agent: AI detect?

Trust Agent: AI collects signals from AI assistants and agentic coding tools such as GitHub Copilot, Cline, and Roo Code, and from the LLMs that power them.

Currently we detect all models provided by OpenAI, Amazon Bedrock, Google Vertex AI, and GitHub Copilot.

How is Trust Agent: AI installed?

We will provide you with a .vsix file for manual installation in Visual Studio Code. Automated deployment via mobile device management (MDM) scripts for Intune, Jamf, and Kandji is coming soon.
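For manual installation, the Visual Studio Code command-line interface accepts a .vsix path through its `--install-extension` flag. The sketch below wraps that command in Python, assuming the `code` CLI is on your PATH. The file name in the usage comment is hypothetical; substitute the .vsix file you actually receive.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List


def build_install_command(vsix_path: Path, code_cli: str = "code") -> List[str]:
    """Return the VS Code CLI command that installs an extension from a .vsix file."""
    if vsix_path.suffix != ".vsix":
        raise ValueError(f"expected a .vsix file, got: {vsix_path}")
    return [code_cli, "--install-extension", str(vsix_path)]


def install_extension(vsix_path: Path, code_cli: str = "code") -> None:
    """Run the install command; raises if the CLI is missing or the install fails."""
    if shutil.which(code_cli) is None:
        raise FileNotFoundError(f"VS Code CLI {code_cli!r} not found on PATH")
    subprocess.run(build_install_command(vsix_path, code_cli), check=True)


# Usage (hypothetical file name):
# install_extension(Path("trust-agent-ai.vsix"))
```

The same result can be reached from the VS Code UI through the Extensions view's "Install from VSIX..." command.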

How do I sign up for Trust Agent: AI?

Trust Agent: AI is currently in closed beta. If you are interested, please sign up for our early access waitlist.

Resources to get you started

Trust Agent: AI by Secure Code Warrior

This one-pager introduces SCW Trust Agent: AI, a new set of capabilities that provide deep observability and governance over AI coding tools. Learn how our solution uniquely correlates AI tool usage with developer skills to help you manage risk, optimize your SDLC, and ensure every line of AI-generated code is secure.

Vibe Coding: Practical Guide to Updating Your AppSec Strategy for AI

Watch on-demand to learn how to empower AppSec managers to become AI enablers, rather than blockers, through a practical, training-first approach. We'll show you how to leverage Secure Code Warrior (SCW) to strategically update your AppSec strategy for the age of AI coding assistants.

AI Coding Assistants: A Guide to Security-Safe Navigation for the Next Generation of Developers

Large language models deliver irresistible advantages in speed and productivity, but they also introduce undeniable risks to the enterprise. Traditional security guardrails aren’t enough to control the deluge. Developers require precise, verified security skills to identify and prevent security flaws at the outset of the software development lifecycle.
