Huawei's UK security problems demonstrate the need for secure coding

Originally published in Information Age. This is an updated version that corrects positioning around Wind River Systems' ongoing security support for its real-time operating system product, VxWorks.

A recent report from the UK's Huawei Cyber Security Evaluation Centre identified major security issues within Huawei's software engineering processes. While much of the news coverage of this critical report has focused on issues left unaddressed from the previous year, the more dangerous and overlooked problem is Huawei's clear failure to put secure coding guidelines into practice. But it's a problem that can be fixed.

The news keeps getting worse for Chinese telecom giant Huawei. While the United States has banned the company outright from future government work, the United Kingdom has been more willing to accept that many of the underlying flaws in Huawei's devices and code are fixable. The UK established the Huawei Cyber Security Evaluation Centre (HCSEC) in 2010 to evaluate and address security issues in Huawei products and to produce an annual report about them. This year, however, the report was especially damning.

Much of the news coverage of the 2019 HCSEC report has focused on the fact that almost none of the security flaws identified the previous year had been addressed. These include the use of an older version of Wind River's VxWorks real-time operating system that will soon reach end-of-life. Huawei has promised to fix that problem (and it will receive ongoing support from Wind River Systems), but in the meantime that older version remains a core component of much of the UK's telecom infrastructure.

A critical factor that most of the mainstream press appears to have overlooked is what could be a fundamentally broken process within the company's development and deployment of new software and hardware. The report notes "significant technical issues" with the way Huawei manages its internal engineering.

Let's look at some examples of the technical issues outlined in the report. To be fair, one of the best things Huawei has done is create secure coding guidelines to help its engineers and programmers write new code. Those guidelines cover a wide range of best practices, such as using known safe versions of system functions and processes from trusted libraries, and never variants with known weaknesses. That's a great thing in theory, but a real-world evaluation of a Huawei production system in the UK found that those guidelines were either never communicated to programmers, ignored by them or simply not enforced.
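
To make the idea of a "known safe" variant concrete, here is a minimal C sketch of a bounds-checked string copy. The name checked_strcpy() and its return convention are hypothetical, loosely modelled on the strcpy_s() style of C11 Annex K (which many C libraries, glibc among them, do not provide). It is an illustration of the pattern, not Huawei's actual library.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical bounds-checked copy in the spirit of C11 Annex K's strcpy_s().
 * Returns 0 on success, -1 if the arguments are invalid or src does not fit.
 * On failure dst (when writable) is set to "", so callers never see a
 * truncated or unterminated result. */
int checked_strcpy(char *dst, size_t dst_size, const char *src)
{
    if (dst == NULL || dst_size == 0) {
        return -1;                 /* nowhere to write, not even a '\0' */
    }
    if (src == NULL) {
        dst[0] = '\0';
        return -1;
    }
    /* Measure src, but never read more than dst_size bytes of it. */
    size_t len = 0;
    while (len < dst_size && src[len] != '\0') {
        len++;
    }
    if (len == dst_size) {         /* no terminator within bound: too long */
        dst[0] = '\0';
        return -1;
    }
    memcpy(dst, src, len + 1);     /* copy including the terminating '\0' */
    return 0;
}
```

Unlike strcpy(buf, input), the caller has to state the destination size and check the result. Blanking dst on failure is a design choice: it makes an oversized input fail loudly instead of silently producing a truncated string.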

The report looked at specific memory-handling functions within public-facing applications, in this case a set of message boards that invite users to submit input. Given that user input should never be treated as trusted, the expectation was that those areas would contain only secure code, as Huawei's internal guidelines require. Specifically, testers looked at direct invocations of memcpy(), strcpy() and sprintf() within those production systems, functions known since 1988 to be capable of causing severe security issues such as buffer overflows.
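
The risk with all three functions has the same root cause: none of them knows how large the destination buffer is. The C sketch below contrasts the unsafe pattern with bounded alternatives for a hypothetical message-board handler; format_post() and copy_payload() are illustrative names, not functions from the report. It relies on the standard C99 behaviour of snprintf(), which returns the length the complete output would have had, so the caller can detect truncation.

```c
#include <stdio.h>
#include <string.h>

/* UNSAFE pattern, shown for contrast only:
 *     char line[64];
 *     sprintf(line, "%s: %s", user, message);   // overflows line if the
 *                                                // input is long enough
 */

/* Bounded formatting into out (out_size bytes).
 * Returns 0 on success, -1 on an encoding error or if the text would not fit. */
int format_post(char *out, size_t out_size, const char *user, const char *message)
{
    int n = snprintf(out, out_size, "%s: %s", user, message);
    if (n < 0 || (size_t)n >= out_size) {
        return -1;  /* error, or the output was truncated */
    }
    return 0;
}

/* Bounded memcpy(): copy len bytes only if they fit in dst_size bytes. */
int copy_payload(void *dst, size_t dst_size, const void *src, size_t len)
{
    if (len > dst_size) {
        return -1;  /* refuse rather than write past the end of dst */
    }
    memcpy(dst, src, len);
    return 0;
}
```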

Shockingly, the testers found about 5,000 direct invocations of 17 safe memcpy() variants, but also 600 invocations of 12 unsafe variants. The other two functions told a similar story: there were 1,400 safe strcpy() invocations but also 400 unsafe ones, and 2,000 safe uses of sprintf() compared with 200 unsafe ones. Most calls did use the safe variants, but that still means roughly 11% of direct memcpy() calls, 22% of strcpy() calls and 9% of sprintf() calls, or about one in eight overall, went through unsafe variants that are open to well-known attacks. That is a large attack surface, and it only accounts for direct invocations of these three functions, not indirect use through function pointers. The auditors only looked at these specific functions, and it is unlikely that they are the only ones with problems.
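
The proportions follow directly from the approximate counts quoted above. A short C sketch of the arithmetic, with the counts hard-coded from this article:

```c
#include <stddef.h>

/* Approximate direct-invocation counts as quoted in this article. */
struct call_counts {
    const char *function;
    unsigned safe;
    unsigned unsafe;
};

static const struct call_counts counts[] = {
    { "memcpy",  5000, 600 },
    { "strcpy",  1400, 400 },
    { "sprintf", 2000, 200 },
};

/* Percentage of direct invocations that use an unsafe variant. */
double unsafe_share(unsigned safe, unsigned unsafe)
{
    unsigned total = safe + unsafe;
    if (total == 0) {
        return 0.0;  /* no calls at all: nothing unsafe */
    }
    return 100.0 * unsafe / total;
}

/* Unsafe share across all three functions combined. */
double overall_unsafe_share(void)
{
    unsigned safe = 0, unsafe = 0;
    for (size_t i = 0; i < sizeof counts / sizeof counts[0]; i++) {
        safe += counts[i].safe;
        unsafe += counts[i].unsafe;
    }
    return unsafe_share(safe, unsafe);  /* 1,200 of 9,600 calls */
}
```

Combined, that is 1,200 unsafe calls out of 9,600, or exactly 12.5%.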

While it's good that Huawei created a best practices guide for its programmers, it's clear that more needs to be done. Outlining security expectations is one step, but guidelines are only effective if developers know them and actively follow them. Huawei could make significant strides in improving its security by committing to train its programmers effectively, rather than just skimming the basics of how to follow its internal guidelines. It must go further and show them how to code more securely in general. Coders need to be thoroughly trained on good (secure) and bad (insecure) coding patterns, and given the responsibility to practice what their company preaches, every single time.

Many of the specific coding problems outlined in the HCSEC report are addressed and enforced as part of the Secure Code Warrior platform, which trains programmers and cybersecurity teams to consistently deploy and maintain secure code. Concepts like never trusting user input, always pulling functions from established libraries, sanitising all input before passing it on to a server and many other safe coding practices are demonstrated throughout the platform. We also look at highly specific vulnerabilities and show, step by step, how to avoid and mitigate them.
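
As one small illustration of treating input as untrusted, the C sketch below validates a message-board username against an allow-list before it is used anywhere else. The function name is_valid_username() and the rules (1 to 32 characters, ASCII letters, digits and underscores only) are assumptions chosen for the example; real rules depend on the application.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_USERNAME_LEN 32  /* hypothetical limit for this example */

/* True for ASCII letters, digits and '_'. Explicit ranges keep the check
 * independent of the current locale. */
static bool is_allowed_char(char c)
{
    return (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z') ||
           (c >= '0' && c <= '9') || c == '_';
}

/* Allow-list validation: accept 1..MAX_USERNAME_LEN allowed characters.
 * Anything else is rejected outright rather than "cleaned up". */
bool is_valid_username(const char *s)
{
    if (s == NULL) {
        return false;
    }
    size_t len = 0;
    for (; s[len] != '\0'; len++) {
        if (len >= MAX_USERNAME_LEN) {
            return false;  /* too long: stop scanning early */
        }
        if (!is_allowed_char(s[len])) {
            return false;
        }
    }
    return len > 0;  /* the empty string is not a valid name */
}
```

Rejecting bad input instead of silently stripping characters is a deliberate choice here: the rule stays simple to reason about, and there is no partially cleaned string to mishandle later.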

In addition to skillful training, companies like Huawei could make use of DevSecOps solutions. These add real-time coaching directly in the IDE, using secure coding recipes customized to the company's security guidelines and acting as the developer's sous-chef in the coding "kitchen" as they write their code. Such an approach could help Huawei programmers of all skill levels write better code and recognise potential vulnerabilities, while also allowing Huawei's security experts to create a "cookbook" of recipes that adhere to their policies and guide developers as they work.
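
To illustrate what a company-specific "recipe" might look like, here is a deliberately naive C sketch of a rule table that maps banned calls to suggested replacements and flags them in a line of source text. It is hypothetical and does not describe how any particular IDE plugin works. It also reproduces a limitation the report itself points out: a purely textual check catches a direct call such as strcpy(, but not a call made through a function pointer.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical "recipe": a banned call and the replacement the policy prefers. */
struct recipe {
    const char *banned;      /* text pattern to look for, e.g. "strcpy(" */
    const char *suggestion;  /* what the coaching hint should recommend */
};

static const struct recipe recipes[] = {
    { "strcpy(",  "use a bounds-checked copy that takes the destination size" },
    { "sprintf(", "use snprintf() and check its return value for truncation" },
};

/* Returns the suggestion of the first recipe whose pattern appears in line,
 * or NULL if none matches. Purely textual: calls made through function
 * pointers, macros or wrapper functions are NOT detected. */
const char *check_line(const char *line)
{
    for (size_t i = 0; i < sizeof recipes / sizeof recipes[0]; i++) {
        if (strstr(line, recipes[i].banned) != NULL) {
            return recipes[i].suggestion;
        }
    }
    return NULL;
}
```

For example, check_line("strcpy(buf, input);") returns the strcpy() hint, while the two lines "char *(*copy)(char *, const char *) = strcpy;" and "copy(buf, input);" both pass unflagged. Real tooling would need to work on the parsed code rather than raw text to catch that case.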

A core lesson from Huawei's troubles should be that creating secure coding guidelines is meaningless if programmers don't know about them, or simply don't know how to follow good coding practices. In this case, the internal best practices guidelines turned out to be Huawei's own zhilaohu: what the West would call "a paper tiger". It was a document with a lot of style but no substance. Giving it real teeth would require the right practical tools and an actual training program, one that takes a hands-on approach and builds knowledge and skill continuously.

Pieter Danhieux, Chief Executive Officer, Chairman, and Co-Founder

Secure Code Warrior is here for your organization to help you secure code across the entire software development lifecycle and create a culture in which cybersecurity is top of mind. Whether you’re an AppSec Manager, Developer, CISO, or anyone involved in security, we can help your organization reduce risks associated with insecure code.

Pieter Danhieux is a globally recognized security expert with over 12 years' experience as a security consultant and 8 years as a Principal Instructor for SANS, teaching offensive techniques for targeting and assessing organizations, systems and individuals for security weaknesses. In 2016, he was recognized as one of the Coolest Tech People in Australia (Business Insider) and awarded Cyber Security Professional of the Year (AISA, Australian Information Security Association). He holds the GSE, CISSP, GCIH, GCFA, GSEC, GPEN, GWAPT and GCIA certifications.
Table of contents

Chief Executive Officer, Chairman, and Co-Founder

Secure Code Warrior is here for your organization to help you secure code across the entire software development lifecycle and create a culture in which cybersecurity is top of mind. Whether you’re an AppSec Manager, Developer, CISO, or anyone involved in security, we can help your organization reduce risks associated with insecure code.

Book a demoDownloadResources to get you started

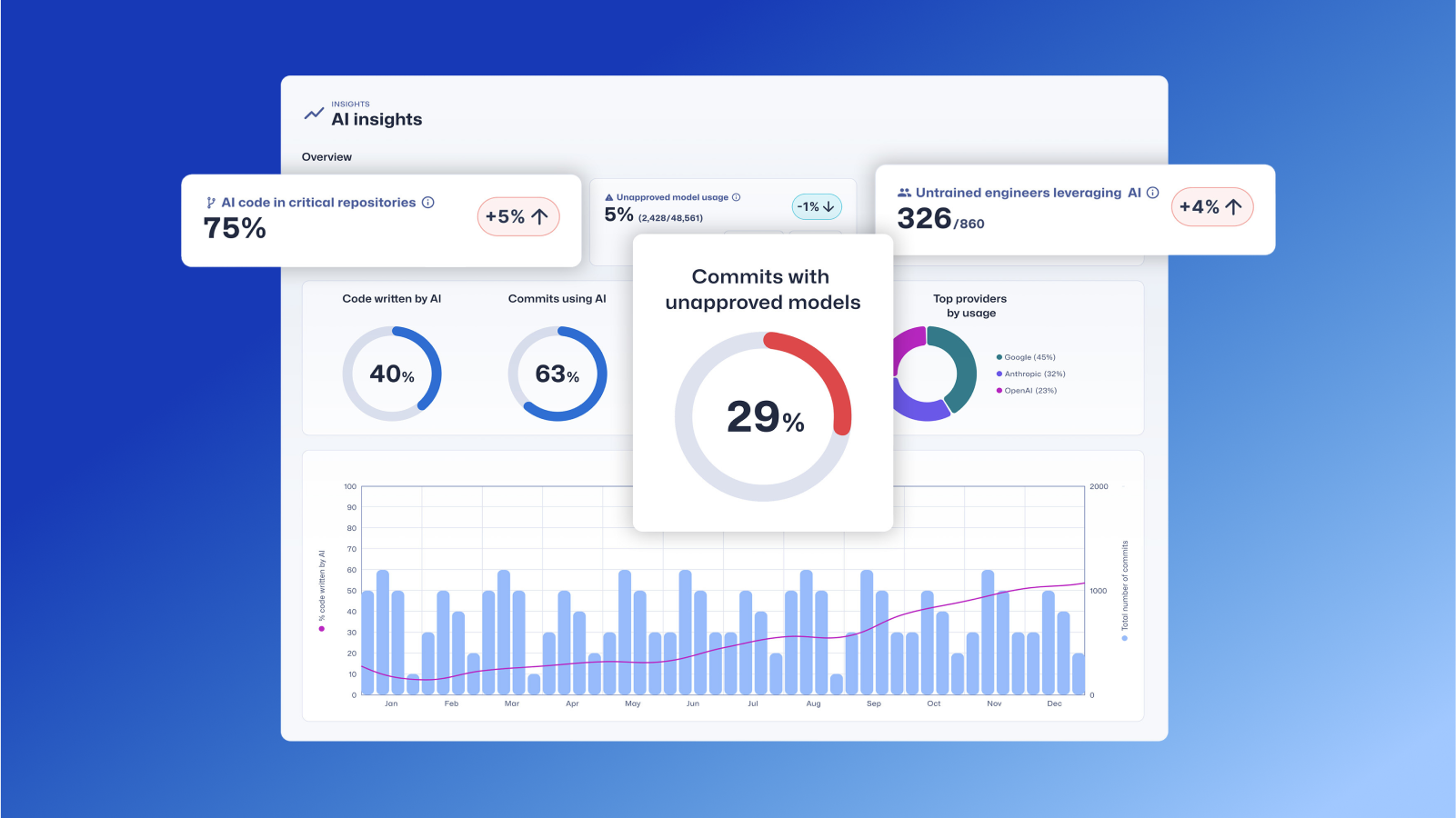

Trust Agent: AI by Secure Code Warrior

This one-pager introduces SCW Trust Agent: AI, a new set of capabilities that provide deep observability and governance over AI coding tools. Learn how our solution uniquely correlates AI tool usage with developer skills to help you manage risk, optimize your SDLC, and ensure every line of AI-generated code is secure.

Vibe Coding: Practical Guide to Updating Your AppSec Strategy for AI

Watch on-demand to learn how to empower AppSec managers to become AI enablers, rather than blockers, through a practical, training-first approach. We'll show you how to leverage Secure Code Warrior (SCW) to strategically update your AppSec strategy for the age of AI coding assistants.

.png)

.avif)

.avif)